This paper introduces MVDiffusion, a simple yet effective method for generating consistent multi-view images from text prompts given pixel-to-pixel correspondences (e.g., perspective crops from a panorama or multi-view images given depth maps and poses).

We show the capacity of MVDiffusion on two challanging multi-view image generation tasks: 1) panorama generation and 2) multi-view depth-to-image generation.

Examples results of panorama generation from text prompts using MVDiffusion. Check out our online demo to generate panorama images using your own descriptions.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

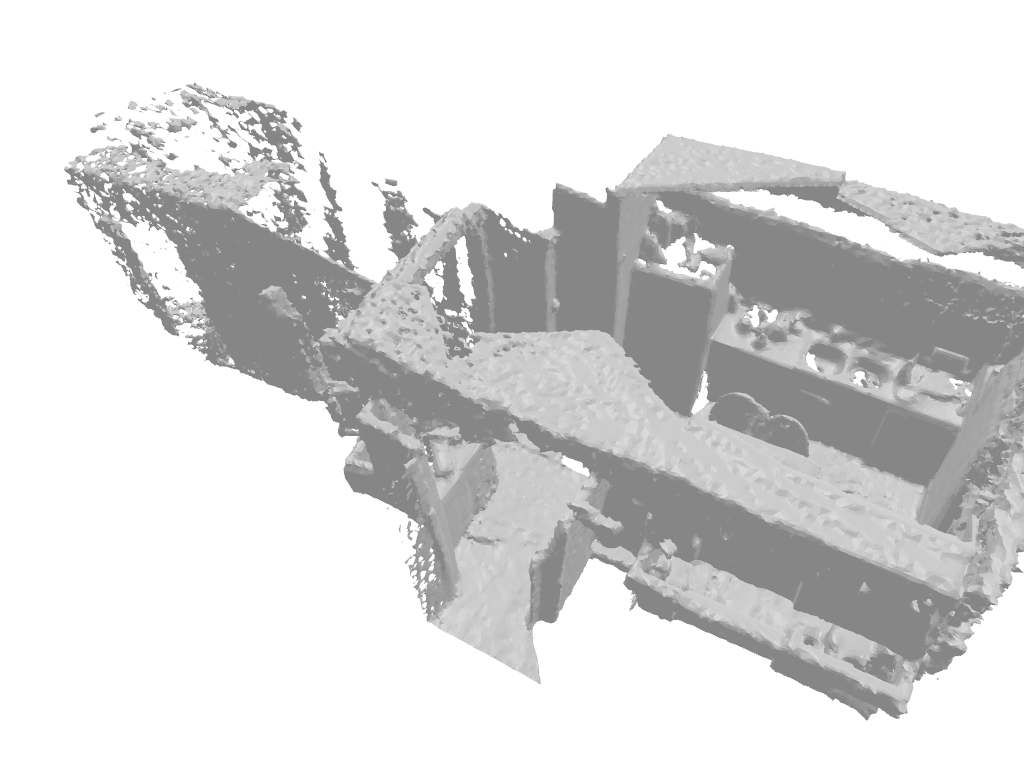

Given a sequence of depth maps from a raw mesh, MVDiffusion can generate a sequence of RGB images while preserving the underlying geometry and maintaining multi-view consistency. The generation results can be further exported to a textured mesh. Check out more results in the gallery page.

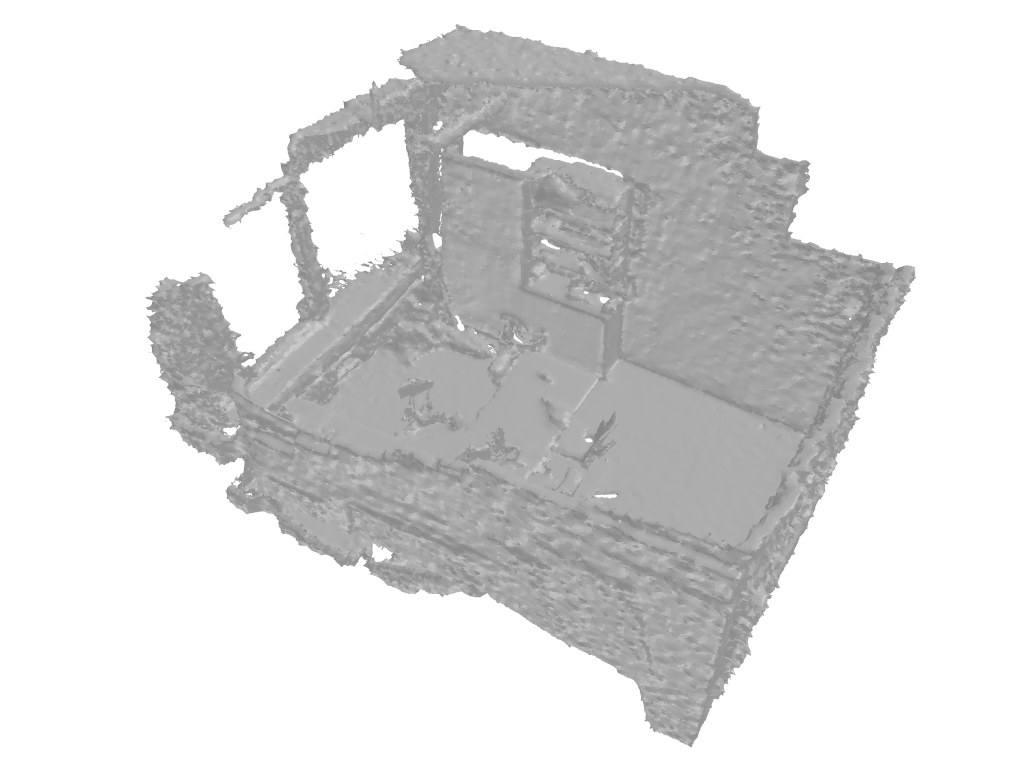

Mesh w/o texture

Input depth | Pred texture

Prompt: An office with a computer desk, a bookcase, a couch, chairs and a trash can. Two monitors and a keyboard are on the desk. Couches is sitting next to the desk.

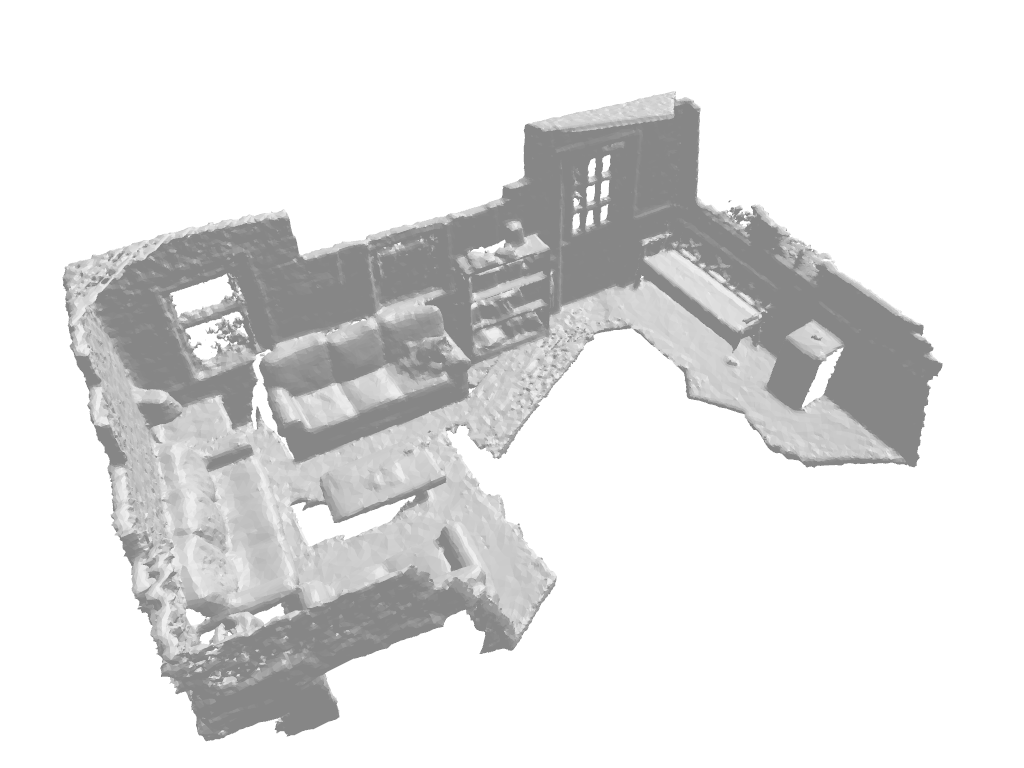

Mesh w/o texture

Input depth | Pred texture

Prompt: A living room with multiple couches and a coffee table. A wooden book shelf filled with lots of books next to a door. A white refrigerator sitting next to a wooden bench.

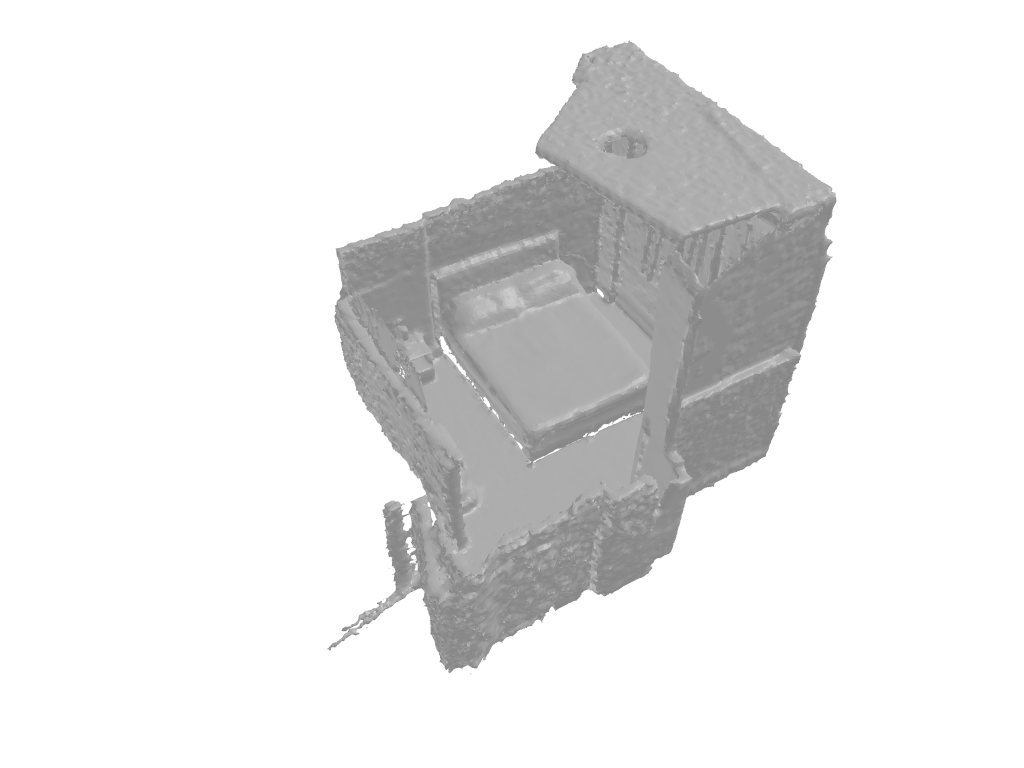

Mesh w/o texture

Input depth | Pred texture

Prompt: A bed with a blue comforter and a vase with purple flowers. Two backpacks are sitting on the floor next to the bed. A window with a curtain is next to the bed.

Mesh w/o texture

Input depth | Pred texture

Prompt: A white stove top oven and a white refrigerator freezer sitting inside of a kitchen. The kitchen with a sink, dishwasher, and a microwave. A wooden table and chairs in the kitchen.

@article{Tang2023mvdiffusion,

author = {Tang, Shitao and Zhang, Fuyang and Chen, Jiacheng and Wang, Peng and Furukawa, Yasutaka},

title = {MVDiffusion: Enabling Holistic Multi-view Image Generation with Correspondence-Aware Diffusion},

journal = {arXiv},

year = {2023},

}

This research is partially supported by NSERC Discovery Grants with Accelerator Supplements and DND/NSERC Discovery Grant Supplement, NSERC Alliance Grants, and John R. Evans Leaders Fund (JELF). We thank the Digital Research Alliance of Canada and BC DRI Group for providing computational resources.